As Artificial Intelligence (AI) evolves, its potential to fortify cybersecurity measures has become a focal point for companies worldwide.

With the alarming escalation in cyberattack speed, scale, and sophistication, businesses are increasingly integrating AI technologies to outmaneuver cybercriminals.

How Microsoft and OpenAI Plan to Enhance Cybersecurity With AI

Microsoft has teamed up with AI innovators like OpenAI to explore AI’s role in cybersecurity. Specifically, the companies are examining how malicious actors misuse AI and how the same technology can be used for defense. They also aim to promote responsible AI use and to keep bad actors from exploiting these tools for harmful purposes.

Microsoft’s initiative, particularly its Copilot for Security, exemplifies a proactive effort to strengthen cybersecurity with AI-driven tools. These efforts align with broader government mandates, such as the White House’s Executive Order on AI, which calls for stringent safety and security standards for AI systems that affect national security and public welfare.

“As part of this commitment, we have taken measures to disrupt assets and accounts associated with threat actors, improve the protection of OpenAI LLM technology and users from attack or abuse, and shape the guardrails and safety mechanisms around our models,” Microsoft explained.

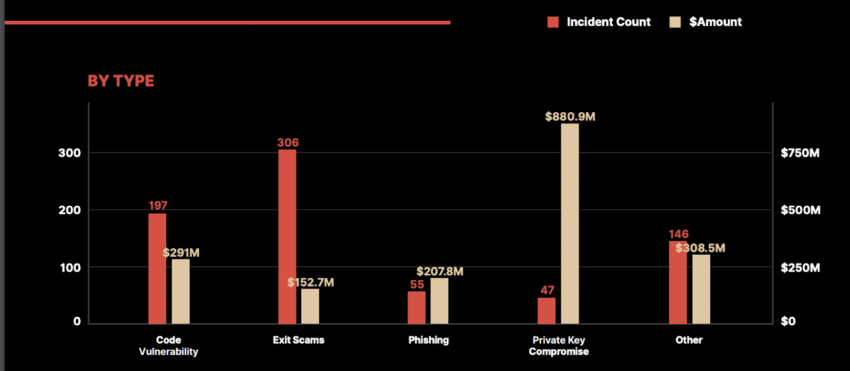

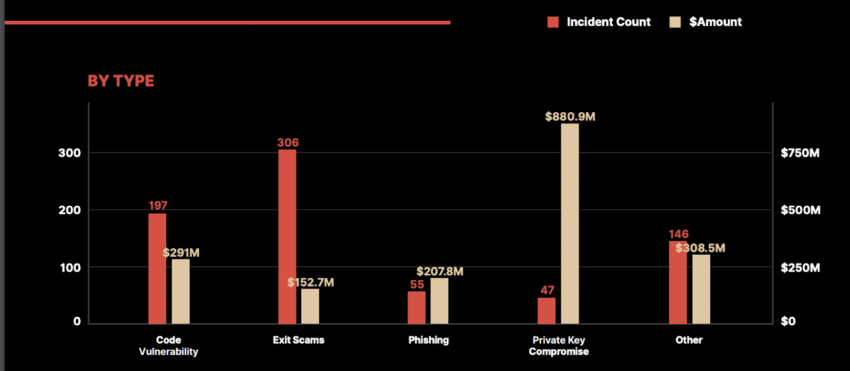

In 2023, the Web3 industry alone lost $1.8 billion across 751 security incidents. These incidents ranged from code exploits and phishing attacks to more complex schemes such as private key compromises and exit scams, with exit scams accounting for a significant portion of the incidents.

The gravest financial toll stemmed from private key compromises, which cost crypto firms $880.9 million. This vulnerability highlights the critical need for robust measures to safeguard sensitive information.

In response to these escalating threats, Microsoft has outlined principles guiding its policy and actions aimed at disrupting the malicious use of AI by identified threat actors, including nation-state advanced persistent threats (APTs) and cybercriminal syndicates. These principles encompass identifying and neutralizing malicious AI use, collaborating with other AI service providers to share threat intelligence, and committing to transparency about actions taken against threat actors.

Microsoft and OpenAI’s joint defense strategy offers a glimpse into the future of cybersecurity, where AI’s generative capabilities are leveraged to counteract malicious activities. By tracking over 300 unique threat actors, including nation-state actors and ransomware groups, Microsoft Threat Intelligence plays a crucial role in identifying and mitigating AI-facilitated threats.

The post How These Companies Will Use AI to Prevent Cyberattacks appeared first on BeInCrypto.